Outset OpenResearch update!

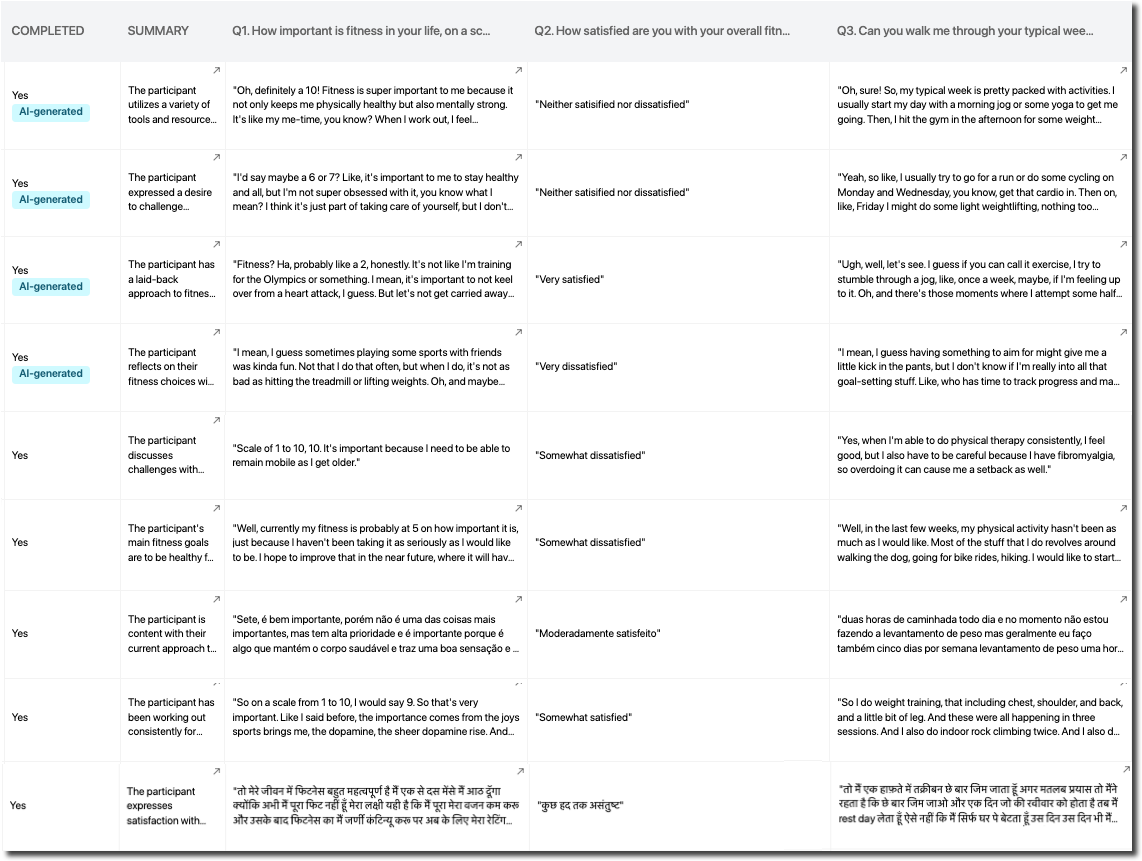

I’ve launched and collected 124 interviews across the US, India (in Hindi or English), and Brazil (in Brazilian Portuguese) on the topic of Fitness Goals & Routines and the role of wearable technology. Here are some observations so far:

Fast & easy to program the interview script - Enter the interview questions (multiple choice, numerical, or free-response), add moderator notes such as for specific probing topics or skip logic, indicate where more open-ended probing questions are appropriate.

Building my trust in their capable AI interviewer - I tested out my script with synthetic user responses, meaning the AI moderator interviewed AI respondents, while I sat back and watched! I reviewed the transcripts of the AI interviewer interviewing a synthetic user to see how it followed my instructions, handled question transitions and probing of different types, if it correctly followed skip logic, etc.

This built my confidence in the AI interviewers capabilities. I was skeptical that the AI would do as good a job as I would conducting the interviews. I was really impressed with the question transitions & natural flow of the conversation.

I was impressed. The AI moderator acknowledged when a topic had come up earlier in the interview. This is something even less-experienced human interviewers sometimes miss when they’re too focused on the script & not internalizing the answers. Not acknowledging when something has been previously mentioned breaks the interview flow and undermines the interviewees feeling of being heard or listened to.

Refinement before piloting! These transcripts allowed me to refine the flow of my interview discussion guide before piloting on real paid-for users.

Word of caution: This is particularly possible/viable because my target audience is the general population on a topic that synthetic users would have familiarity with - fitness is within the realm of their familiarity & understanding. I would be more careful/skeptical if my target users were subject-matter experts like neurosurgeons or were expected to have very specific or niche experiences that were unlikely to be in the synthetic user training data.

Allowed me to pre-test my reporting plan - I typically like to “start at the end” by considering my reporting plan. With Outset, I generated enough synthetic user responses to create a dummy report. This report wouldn’t have any real-user findings, but let me explore whether the interview guide gathered enough of the kinds of data I needed to report on the topics I intended to report on. This exercise highlighted where I needed to add an additional probing instruction for the AI moderator.

Accelerated my process - I was able to develop my questions, then code, pilot, review the 5 transcripts, and iterate on my interview script in roughly 2 hours.